Resource Health

CloudWatch (AWS), year 2021

Creating a service that allows the user to monitor the health of all their resources on a single page

The challenge

Understanding the real user need

CloudWatch is a product DevOps engineers use to monitor their applications. The most important aspect of monitoring is having an overview of the health of multiple resources on a single page, and being able to slice and dice that information before identifying and deep diving into a particular resource that has an issue. The product team recognized that this high-level overview was missing from CloudWatch after a similar solution had recently been released in a competitor's tool and was gaining traction. We wanted to focus on EC2 instances first and then gradually add other resource types.

The PM requirement was to "copy" the competitor tool and make the same experience available in CloudWatch without any deep dive into our customers' specific use cases. Instead of starting from the customer problem, the plan started from the solution. I flagged this gap and asked for time to do research before we invested development effort in something our customers might not find useful. I had been advocating for the importance of following the UX process for a while, so the team was easy to convince. However, the PM had no capacity to help, so I was given ownership of the project. My goal was to learn as much as I could about our customers' expectations before getting into the design.

There were three big challenges we faced:

- Wearing many hats in a short time frame: acting as PM, UX designer, and researcher

- Defining the MVP for a service where everything seemed to be a "must have"

- Pushing for V2 in an environment that never dedicates time to iteration

My Role

- Conducting research to understand customer needs

- Organizing brainstorming sessions and collaborating with other teams from AWS

- Prioritizing user stories and defining the road map

- Creating high fidelity prototypes

- Conducting usability testing

- Collecting and analyzing feedback and preparing for V2

The approach

Concept testing to understand user needs

I had only two weeks for exploratory research, so I adapted the competitor's experience to our environment to quickly test the concept and learn which features mattered to our customers. Since we were building this view for EC2 instances, which belong to a separate AWS service, I reached out to the EC2 team early to gather past research and understand what customers needed in terms of monitoring. The researcher on that team also helped me recruit the right customers.

I created low-fidelity mocks in Balsamiq and prepared the interview script. I wanted to learn how customers solve the problem today, but also get quick feedback on the conceptual designs. Talking to customers opened up new ideas, gave me a lot of insights, and helped me understand the core problem better. I also learned which competitor features didn't make sense for our customers and which ones they really needed:

- Customers didn't find it useful to resize the nodes based on another dimension. The visual representation was too confusing for them, so we opted for sorting instead

- Customers had their own definition of "healthy", so we wanted to let them set up their own thresholds and choose the colors that represent them

- Customers wanted easy access to additional information, so we chose to show a quick glimpse of the main details on hover

Sharing research results and ideating

I put together a research report with the learnings, including customer quotes and short video snippets. I usually involve stakeholders in customer calls, but this time everybody was busy with other projects, so I needed another way to help them empathize with the customers, and the quotes and videos really helped there. I presented the findings to the product and development teams as well as the EC2 team to get their buy-in to explore different concepts rather than fixating on one solution that worked well for the competitor. I got support to keep exploring options that were more in line with what our customers wanted and needed, but was again given a tight deadline because the development team wanted to start as soon as possible.

Defining user journey and brainstorming with the team

The research insights also helped me put together a quick user journey. It became clear that the user's starting point was not CloudWatch at all but the EC2 service, and that there were many other touch points with different services within CloudWatch. I realized I'd need to map these out to make sure we linked between those services and embedded the information where it was needed.

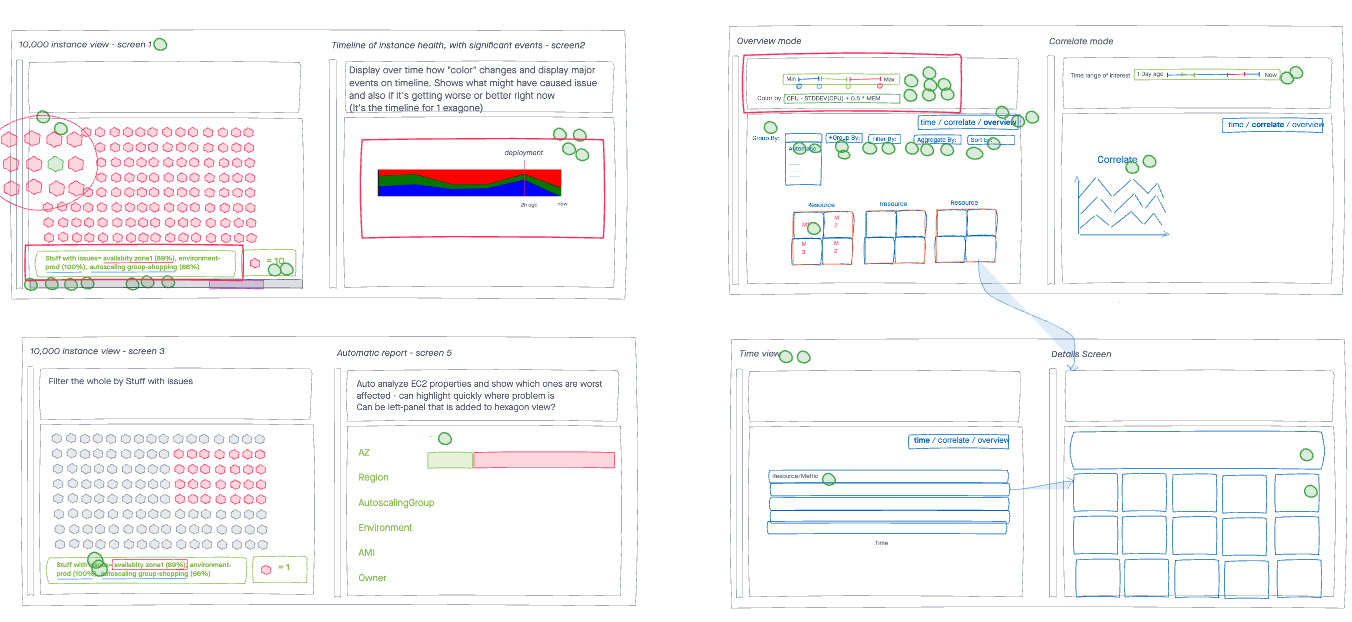

With the research insights and a high-level user journey in place, I got the team together to start brainstorming. Everybody was already familiar with the competitor's solution, but the insights we had gathered helped us think outside the box and consider different options. I also involved the EC2 team in the brainstorming sessions to learn more about the service itself and understand their point of view.

I prepared, facilitated, and participated in those sessions. We did Crazy 8s and then split into groups to develop more refined solutions from the quick ideas. At the end we voted on the ideas we felt were the strongest. I chose a decider who listened to the reasoning behind the votes and was in charge of picking the direction based on everything he heard from the rest of the team. The most innovative idea was a "smart recommendation" that would suggest how users could group their EC2 instances so they could correlate issues more quickly.

Concept brainstorming ideas

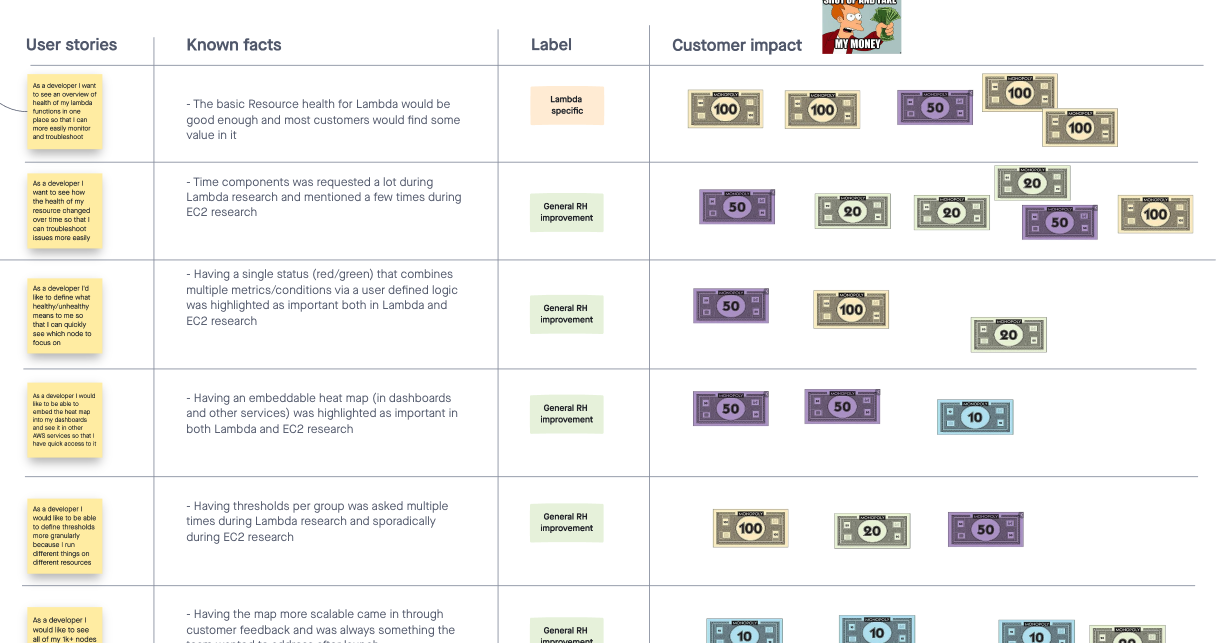

Prioritizing user stories and checking feasibility

We had a lot of ideas on the table with varying levels of feasibility. The team was already behind on other projects, so we really needed to scope this one down to the most basic but still meaningful MVP. I got the team together and we played a version of Monopoly: each stakeholder was given the same amount of money and asked to buy the features they most wanted. I included all the features, from the most basic to the most challenging, to first understand what we felt was most important and to help us draw the line. Because of the time constraints I couldn't reach out to external customers, but I included 5 internal customers in this workshop and gave them twice as much money so their "votes" counted more.

Creating a road map

The exercise helped us narrow down and prioritize ideas, but we didn't want to lose sight of everything else we had come up with, and we wanted to make sure we built enough stories in each area for the MVP to be fully usable. Together we built a user story map: we defined each area of the product, listed all our ideas, drew the MVP line and the V1 line, and marked what needed more exploration, since some ideas were very high level and needed more customer input. This was a great exercise that showed us whether we were investing too much in one area of the product while leaving another underdeveloped.

The MVP needed to be fully usable from the start, so even though the team felt passionate about certain customization options, we had to make some trade-offs and leave those out. Creating the road map was the most time-consuming part of this project because it was difficult to get everyone to agree on the MVP scope. With limited resources we needed to make a lot of trade-offs, so we held several meetings to discuss and iterate. My proudest moment was when a team member brought up a customer quote from the research presentation while advocating for a particular feature.

Prioritizing the ideas

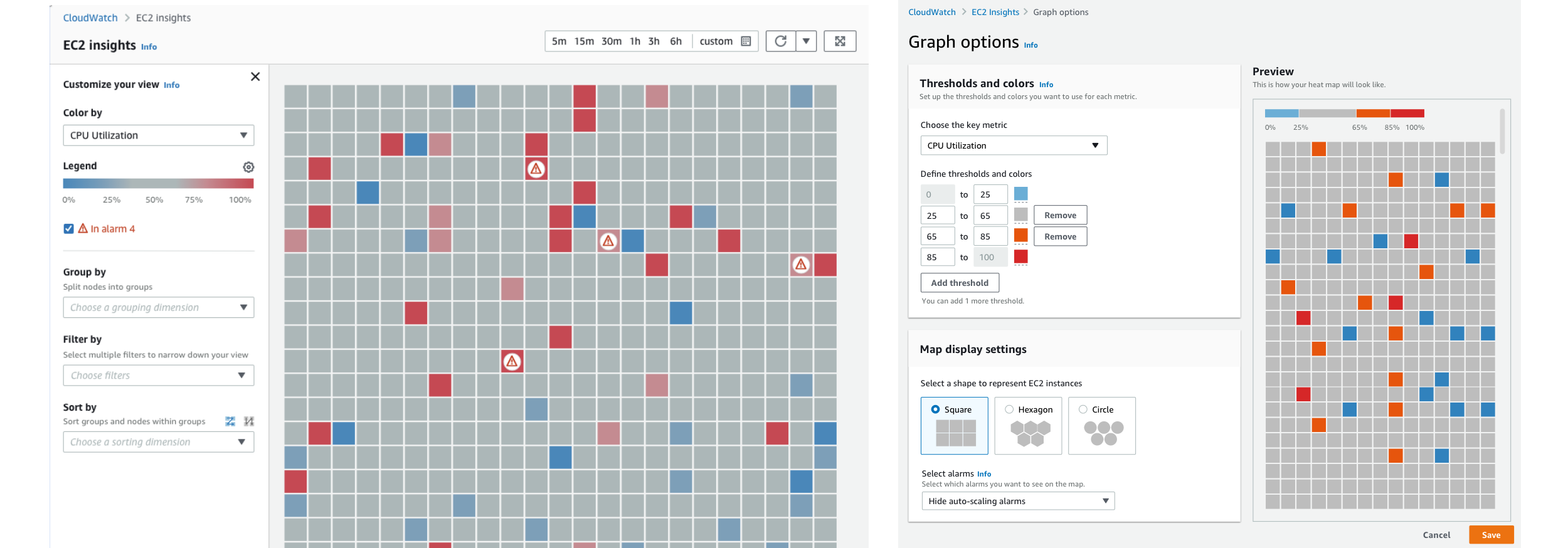

Creating high-fidelity prototypes, usability testing, and preparing a functional doc

I worked on high-fidelity designs in parallel with the road map discussions. This sped up the process considerably and let me include more features in the design than we were planning to build in the first phase. I wanted to run usability testing on more complete designs so that I wouldn't have to do it twice. This time the team asked me about the usability testing sessions and wanted to shadow them, and even though we were under a lot of time pressure, they encouraged me to take my time with testing because they could do some backend work in the meantime. The usability testing sessions surfaced several ideas for improvement, which we addressed in the final design:

- Users didn't notice the settings page, so I made it more accessible and easier to discover

- Users with a large number of instances were not happy with the limitations, so we made sure to include a quick breakdown of thresholds

- Users couldn't figure out how to get to the dashboard page, so we made that CTA more prominent and clear

I put together a functional doc detailing all the edge cases and scenarios, and the expected behavior in each, to make implementation easier for the development team. I offered support during implementation to answer questions and review the implemented UI to make sure it was pixel perfect. I also defined client metrics (clicks to track in the UI), and together with the PM we defined success metrics. We decided to use adoption rate as our main KPI.

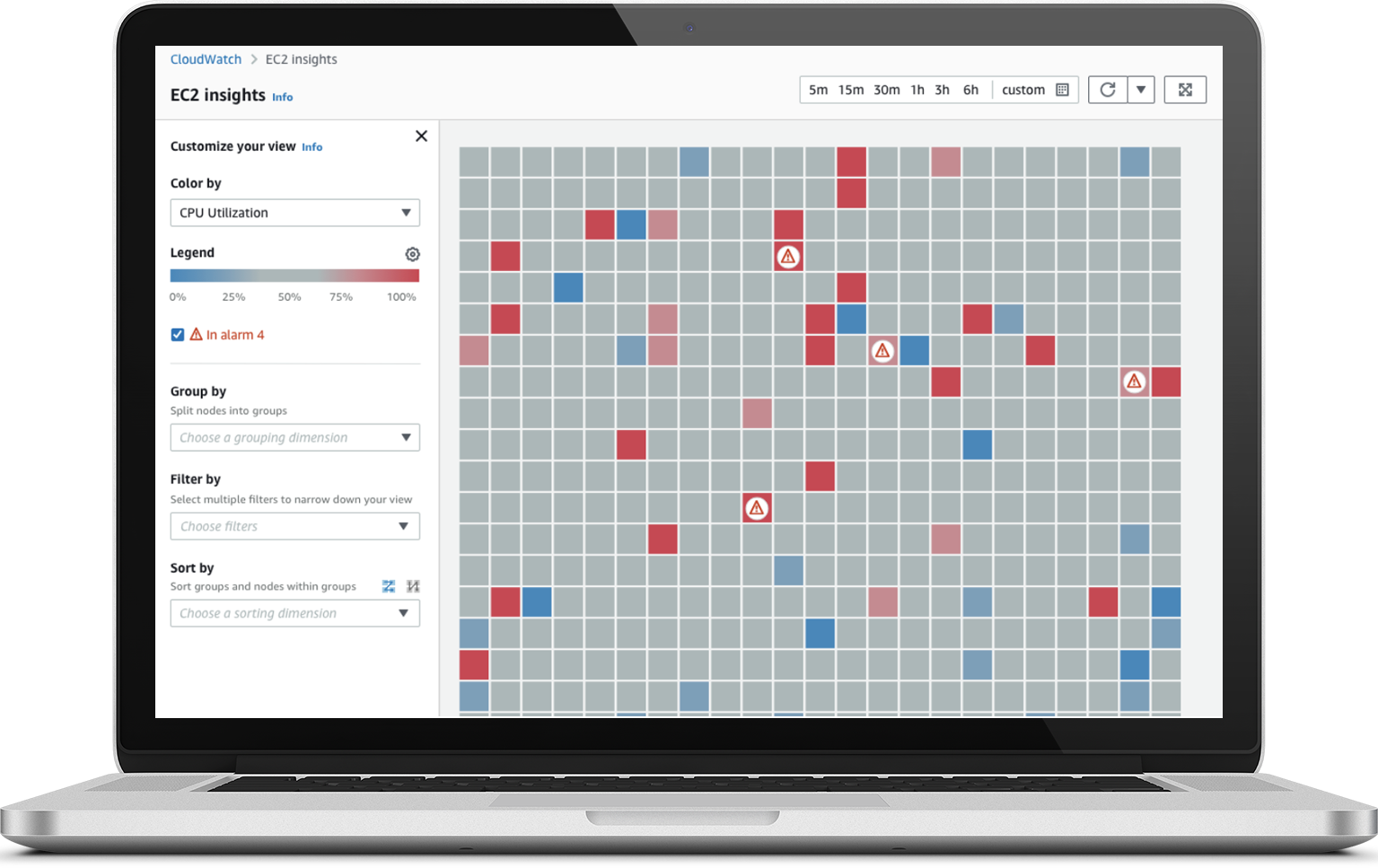

High-fidelity wireframes

Final results

Analyzing feedback and defining V2

We first released the MVP to several beta customers: internal customers we had been working with who were excited to try it out and provide additional feedback. Feedback was positive, and apart from big feature requests for things we already knew were missing, we didn't hear about any blockers. After a month in beta, we fully released the MVP. We kept track of the feedback coming in through the tool and kept an eye on the metrics and adoption rate.

In the first month adoption was already high (10k active customers), and most users were coming from EC2, where we ultimately embedded the entire heatmap as an entry point to our service. We didn't receive any negative feedback after launch, but there were only a few feedback items overall, so I sent out a survey to gather more. In parallel, I started preparing another round of customer calls to dig deeper into the features we knew were missing but that were too big and too ambiguous for the MVP. Development of some of these additional features is currently in progress.

Key Learnings and Takeaways

- Overseeing the entire project and having ownership really helps you understand all the details and stay in charge

- Collaborating with other teams is always worthwhile, even with the challenges that working with a bigger team brings

- Get the team excited about working together and involve the same set of stakeholders regularly (we had weekly meetings)

- Validate and iterate again and again based on customer feedback

- Spending more time early on means less ambiguity later in the project

- Giving back to the community is a long and tedious process, but a very rewarding one